…so that online workshops reach a truly meaningful, shared conclusion

Year

2021

Overview

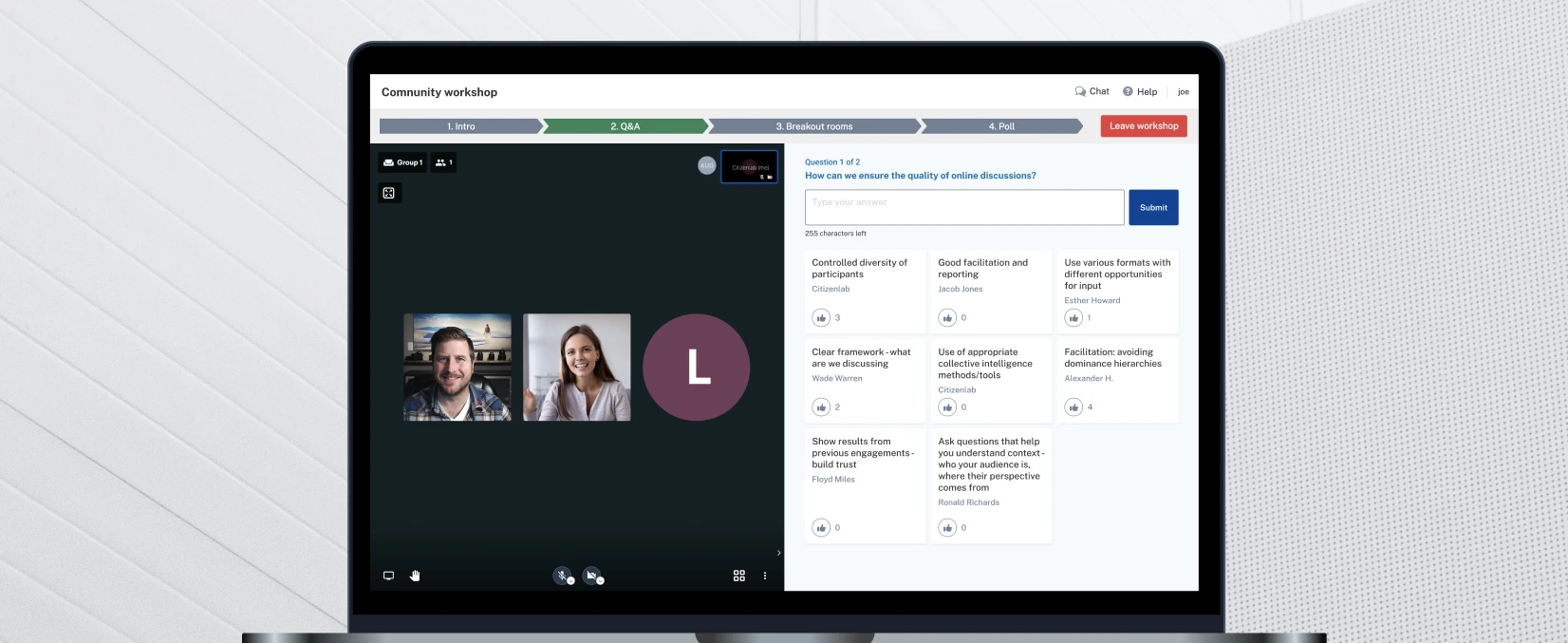

Due to Covid-19, more and more communities have begun giving online workshops a try. Online workshops have inherent limitations compared to in-person ones, but they also have benefits that come from their digital environment. To bring more people into meaningful participation in this difficult time, the team aimed to maximise the benefits of online workshops by expanding their features.

Client

CitizenLab is a community engagement platform used by local governments and organisations to engage their community members. The company offers engagement tools such as surveys, ideation, participatory budgeting, online workshops, and input analysis.

My role

As a freelance designer, I joined the Online Workshop squad, which consisted of 1 product manager and 2 developers. With the help of a mentor designer, I led the process of ideation, wireframing, user interviews, prototyping and testing. I was initially hired for 4 weeks, and I was then offered a full-time position as a Product Designer.

Design Process

1

Understand

"What is the problem?"

Problem explanation meetings

2

Explore

“What are the possible solutions for us?”

Wireframe, wireframe, and wireframe

3

Evaluate

“What is the best solution?”

Team discussions, user interviews, polling, and scorecards

4

Design

“How can we make the features as usable as possible?”

Prototyping, user testing, support through launch

01. Understand

The Problem

“I can collect input cards, talk about them with participants, and write a conclusion paragraph, but I still need more tools to effectively evaluate input and reach a conclusion. More specifically, I want to select the inputs we want to discuss as a group, or find bigger themes and patterns in the collected inputs.”

— Workshop facilitator

“Not everyone feels sufficiently comfortable giving their reaction to input in the live video chat, which leads to suboptimal groups in terms of diversity. The current voting method (upvoting, aka “Like”) offers too few options for them to express their opinion in a more nuanced way.”

— Workshop participant

What is actually happening in online workshops?

The largest share of community workshops (41%) follows the diamond structure, in which facilitators gather input from residents (diverge) and then reach a shared conclusion (converge). The rest were information sharing (39%), polls (9%), and loose discussion (5%).

From the interviews with users, the team found that users’ pain points are mostly in the ‘converge’ part, as there was limited functionality for evaluating and structuring the collected inputs.

02. Diverge

What are the possible solutions for facilitating discussions?

Focusing on diamond-structured discussions, I listed methods for gathering and structuring opinions, such as voting, comments & reactions, and structuring input. Checking what other digital products (e.g. Miro, Whimsical) were doing to aid remote team discussions helped me with ideation.

Wireframe, wireframe, wireframe.

I short-listed the possible solutions by asking these questions, then turned them into wireframes:

Effectiveness: Does it effectively do its job of assessing/structuring inputs?

Easy participation: Is it simple and easy enough for everyone, including senior people with little digital experience?

Workshop context: Does it work well with the setup of an online workshop, where multiple people are talking on the video chat?

03. Evaluate

Team discussion

What I liked most about the project was that the whole team was closely engaged throughout the design process. With the help of the senior designer and the product manager, I brought sets of wireframes to team discussions several times and talked to colleagues who had more experience with users. Getting feedback from developers at an early stage was important for learning about realistic constraints.

User interviews & polling

A poll was sent out to current users as a temperature check, which helped narrow the options down to a few solutions and collect pros and cons.

I also took a whole day to do rounds of 20-minute interviews with actual facilitators, where I verified big and small assumptions before adding UI styling.

Scorecards

To select the method that best fits our context, I made a scorecard to assess how each method works in different scenarios.

For example, I short-listed 3 methods for structuring input and specified potential scenarios. Then I scored each method on a scale of 1-5. Through a team discussion we worked out which scenarios carried the most weight, and this made the decision much easier.
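As a rough illustration of how such a scorecard can be turned into a ranking, here is a minimal sketch of a weighted average. The method names, scenario names, scores, and weights are all hypothetical placeholders, not the values the team actually used.

```python
from typing import Dict


def weighted_scores(
    scores: Dict[str, Dict[str, int]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Return the weighted average score (still on the 1-5 scale) per method."""
    total_weight = sum(weights.values())
    result = {}
    for method, by_scenario in scores.items():
        weighted_sum = sum(by_scenario[s] * w for s, w in weights.items())
        result[method] = weighted_sum / total_weight
    return result


# Hypothetical scorecard: 3 methods scored 1-5 in 3 scenarios.
scores = {
    "method_a": {"scenario_1": 5, "scenario_2": 4, "scenario_3": 2},
    "method_b": {"scenario_1": 3, "scenario_2": 2, "scenario_3": 5},
    "method_c": {"scenario_1": 4, "scenario_2": 3, "scenario_3": 3},
}

# Hypothetical weights agreed on in a team discussion (higher = more important).
weights = {"scenario_1": 3, "scenario_2": 2, "scenario_3": 1}

result = weighted_scores(scores, weights)
best = max(result, key=result.get)
print(best, round(result[best], 2))
```

Writing the weights down separately from the scores keeps the two discussions apart: the team can argue about which scenario matters most without re-scoring every method.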

04. Converge

Why dot voting?

Quality engagement

Through interviews, many facilitators shared their positive experiences with dot voting: participants were actively engaged in distributing their votes, and overall the method strikes a good balance of fun and effectiveness.

Suitable for a focused, separate voting session

Unlike up/down voting, dot voting needs more concentration and is therefore suited to a separate, time-limited voting session. This lets participants vote without knowing what others are doing, which leads to unbiased results.

Weighted votes

Participants can make weighted choices by casting multiple dots on a single input card.

Discussion points_Dot voting

Effective facilitation: How facilitators monitor the progress

Facilitators want to check who has started voting and who hasn’t, to ensure that no one is facing technical issues. They also want to see how many votes have already been cast, to decide when to stop the voting session. After many iterations I decided to add two small graphs showing how many people had started voting and how many dots had been cast.

Draft of Facilitator’s view

Voting integrity: How far should we open the voting progress/results?

It was tempting to open all the voting results, including who voted for what, to the facilitators. Some facilitators pointed out that this would help them ask questions and lead conversations. However, I took the participants’ perspective on this matter and decided to keep the voting completely secret, so no one can access this data. Only the number of dots and the number of people who voted are visible to everyone, including all participants.

Also, several colleagues asked me to show what other people are voting on in real time, to make it as similar as possible to offline voting sessions. However, I kept everything hidden during the voting session so that no one is influenced by what others are voting on. I chose to maximise the benefit of an online workshop: real-time, but not physically together.

Usability: Which direction to go?

I ran small usability tests to make sure the minor interactions are optimal. For example, should the remaining votes disappear from the right or from the left?

The first version felt natural to me, but it turned out that most people expected it to work as in the second version.

Or, when casting multiple votes on one card, should a user move their cursor to do it or keep the cursor in the same place?

Several colleagues pointed out that the second version makes it easier to cast multiple votes, but I insisted on going with the first version as it more closely resembles real-life dot voting (putting stickers next to each other). Also, the whole purpose of dot voting is not to cast multiple votes on a single option but to distribute the votes carefully. That’s why I didn’t want to nudge participants too much towards casting multiple votes.

Why tagging?

Suitable for small datasets

The average number of inputs per workshop is 20, and tagging is the best method for small datasets.

Space constraints

Even though other, more advanced methods had more benefits in many respects, the team decided to keep the video area at 50% to provide a consistent and stable experience. The tagging module works best within that space constraint.

Feasibility

The tagging module doesn’t require a completely new structure, as a Kanban board would, so everyone in the team was happy to start from the simplest method.

Discussion points_Tagging

What is MVP?

There was not enough time to develop the tagging module to begin with, and the team decided to ‘get it out there as early as possible and see how it goes.’ Many of the small features discussed (e.g. multiple tagging, tag management, …) were cut, and I focused on designing a minimal but usable tagging feature.

For example, I explored several options for ‘sort by tags’ to best present grouped inputs after some tagging is done. Later, I decided to simply change the card order for fast implementation. As I wasn’t sure how to sort the cards, I ran a small user test to see what is generally expected: tags with more inputs come first, then alphabetical order.
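The sorting rule that came out of the user test can be sketched in a few lines. This is only an illustration: the `Card` type, the sample cards, and the choice to put untagged cards last are assumptions, not a description of the shipped implementation. It assumes one tag per card, since multiple tagging was out of scope.

```python
from collections import Counter
from typing import List, NamedTuple, Optional


class Card(NamedTuple):
    text: str
    tag: Optional[str]  # one tag per card; None means untagged


def sort_by_tags(cards: List[Card]) -> List[Card]:
    """Order cards so larger tags come first, ties broken alphabetically.

    Untagged cards go last (an assumption). Python's sort is stable, so
    cards sharing a tag keep their original relative order.
    """
    counts = Counter(c.tag for c in cards if c.tag is not None)

    def key(card: Card):
        if card.tag is None:
            return (1, 0, "")
        return (0, -counts[card.tag], card.tag.lower())

    return sorted(cards, key=key)


# Hypothetical input cards.
cards = [
    Card("More bike lanes", "mobility"),
    Card("Plant trees", "green"),
    Card("Safer crossings", "mobility"),
    Card("No idea yet", None),
    Card("Bus shelter", "access"),
]

ordered = sort_by_tags(cards)
for card in ordered:
    print(card.tag, "|", card.text)
```

Because only the card order changes, no new layout is needed, which matches the goal of a fast implementation.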

How to add tagging module along with dot voting onto the current structure?

I had 2 options to choose from:

Enable ‘add a tag’ always: the menu sits with ‘delete’ and ‘edit’ on every input card

Enable ‘add a tag’ only when facilitator plans it beforehand

This was a bit of a headache at the beginning, as both options felt right but have very different structures. I decided to go with option 2 for the sake of scalability. The team was planning to build more input evaluation and structuring modules in the near future, so it would be easier to keep adding new modules if they are individual items in the room setting menu.

I decided to treat both the tagging and dot voting modules as part of the ‘input development’ options: after collecting inputs, a facilitator can choose to 1) evaluate (via upvoting and dot voting) or 2) structure (via tagging) to develop those inputs into a conclusion. The team is planning to add more modules to these input development options in the near future.

Final design_Dot voting

One action at a time

The tool must be inclusive of citizens at every level of digital proficiency. Not everyone is familiar with dot voting, so the one desired action for each step is clearly announced in the box.

Progress monitoring

Once a session begins, facilitators can see how many people have started voting, to check that everything works well on the participants’ side. They can choose when to end the session based on progress.

Unbiased results

Participants can’t see what others are voting on during the voting session. The voting results include both the number of votes and number of voters to best reflect the group’s opinion.
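To make the two reported numbers concrete, here is a minimal sketch of how dots and voters per card could be tallied from secret ballots. The data shapes, names, and the budget check are assumptions for illustration only, not the platform’s actual data model.

```python
from collections import defaultdict
from typing import Dict, NamedTuple


class CardResult(NamedTuple):
    dots: int    # total dots placed on the card
    voters: int  # number of distinct participants who placed at least one dot


def tally(
    ballots: Dict[str, Dict[str, int]],
    dots_per_person: int,
) -> Dict[str, CardResult]:
    """Aggregate ballots into per-card totals without exposing who voted for what."""
    dots: Dict[str, int] = defaultdict(int)
    voters: Dict[str, int] = defaultdict(int)
    for participant, allocation in ballots.items():
        if any(n < 0 for n in allocation.values()):
            raise ValueError(f"{participant} has a negative dot count")
        if sum(allocation.values()) > dots_per_person:
            raise ValueError(f"{participant} used more than {dots_per_person} dots")
        for card, n in allocation.items():
            if n > 0:
                dots[card] += n
                voters[card] += 1
    return {card: CardResult(dots[card], voters[card]) for card in dots}


# Hypothetical ballots: participant -> {card: dots placed on it}.
ballots = {
    "p1": {"card_a": 3},                  # all dots on one card (weighted vote)
    "p2": {"card_a": 1, "card_b": 2},
    "p3": {"card_b": 1, "card_c": 1},     # one dot left unused
}

results = tally(ballots, dots_per_person=3)
for card, res in sorted(results.items()):
    print(card, res.dots, "dots from", res.voters, "voters")
```

Showing both numbers helps tell a card that one person weighted heavily apart from a card with broad support.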

Customisability

Facilitators can set their own prompt to clearly specify the purpose of voting. They can also set a number of votes per person.

View my votes

During the voting session, the ‘view my votes’ button filters the list down to the cards a user has cast votes on. This comes in handy when there is a large set of cards.

Final design_Tagging

Easy edit/delete

Simply hover over a tag, and you can edit or delete it quickly during the real-time workshop.

Sort by tags

After some tagging, you can choose to sort by tags to capture the patterns and trends in the inputs. Tags with more inputs linked to them come first.

Mix with dot voting

Facilitators can choose to run both a dot voting session and a tagging session on the same set of cards. The result of each session is added to the cards.

The Result

The two features were launched on time, and the tagging module reached an adoption rate of 19%. Even better, I was offered a longer contract by the company after completing this project.

I learned what my role as a UX designer in a company is, and how to work with developers from the early stages. Capturing insights from user interviews and testing sessions was a satisfying experience.

That's it! Thanks.

© 2023 Made by Yaesul